If you’ve been wondering when our military would start deploying artificial intelligence on the battlefield, the answer is that it appears to be happening already. The Pentagon has been using computer vision algorithms to help identify targets for airstrikes, according to a report from Bloomberg News. Just recently, those algorithms were used to help carry out more than 85 airstrikes as part of a mission in the Middle East.

The bombings in question, which took place in various parts of Iraq and Syria on Feb. 2, “destroyed or damaged rockets, missiles, drone storage and militia operations centers among other targets,” Bloomberg reports. They were part of an organized response by the Biden administration to the January drone attack in Jordan that killed three U.S. service members. The government has blamed Iranian-backed operatives for the attack.

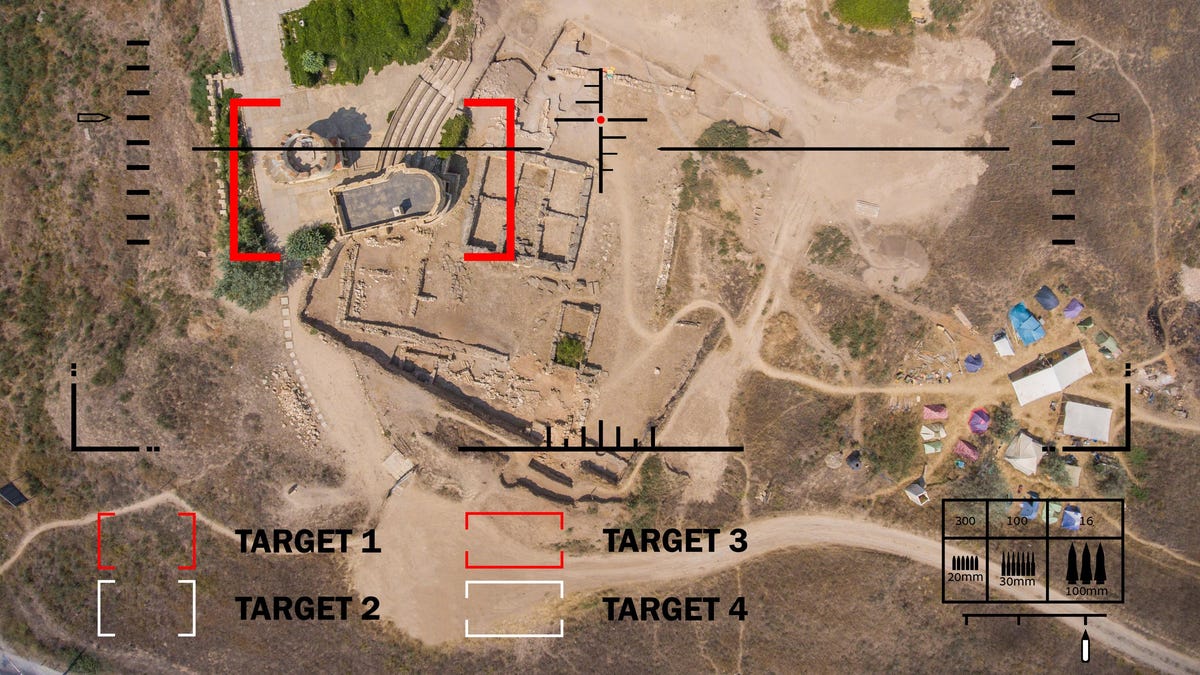

Bloomberg quotes Schuyler Moore, chief technology officer for U.S. Central Command, who said of the Pentagon’s AI use: “We’ve been using computer vision to identify where there might be threats.” Computer vision, as a field, revolves around training algorithms to identify particular objects visually. The algorithms that helped with the government’s recent bombings were developed as part of Project Maven, a program designed to spur greater use of automation throughout the DoD. Maven was originally launched in 2017.

The use of AI for “target” acquisition appears to be part of a growing trend, and an arguably disturbing one at that. Late last year it was reported that Israel was using AI software to decide where to drop bombs in Gaza. Israel’s program, which it has dubbed “The Gospel,” is designed to compile massive amounts of data and then recommend a target to a human analyst. That “target” could be a weapon, a vehicle, or a live human. The program can suggest as many as 200 targets in a period of just 10-12 days, Israeli officials have said. Israelis also say that the targeting is just the first part of a broader review process, which can involve human analysts.

If using software to identify bombing targets seems like a process prone to horrible mistakes, it’s worth mentioning that, historically speaking, the U.S. hasn’t been particularly good at figuring out where to drop bombs anyway. If you’ll recall, previous administrations were liable to confuse wedding parties with terrorist HQs. You might as well let a software program make those kinds of calls, right? It seems like there could be less human culpability that way. If the wrong person gets killed or you bomb a medicine factory instead of a munitions stockpile, you can always just blame it on a software glitch.
